Fintechs must respond to a rapidly changing environment by offering innovative products and services that are also compliant with evolving KYC regulations.

For many years, regulation was developed to meet the specific challenges and dangers facing larger, traditional financial institutions. Fintechs, although smaller, manage similar risks of criminal and fraudulent behavior, which is why they are increasingly subject to the same regulatory oversight.

In February 2022, UK-based fintechs called on the government to overhaul the regulation of fintechs, and create an environment where firms could continue to grow while keeping the market safe.

Whatever changes may happen in the future, complying with KYC and AML regulations will remain key. In Europe, this includes the Anti-Money Laundering Directive (AMLD, now in its sixth iteration) and the eIDAS Regulation.

Artificial intelligence and KYC.

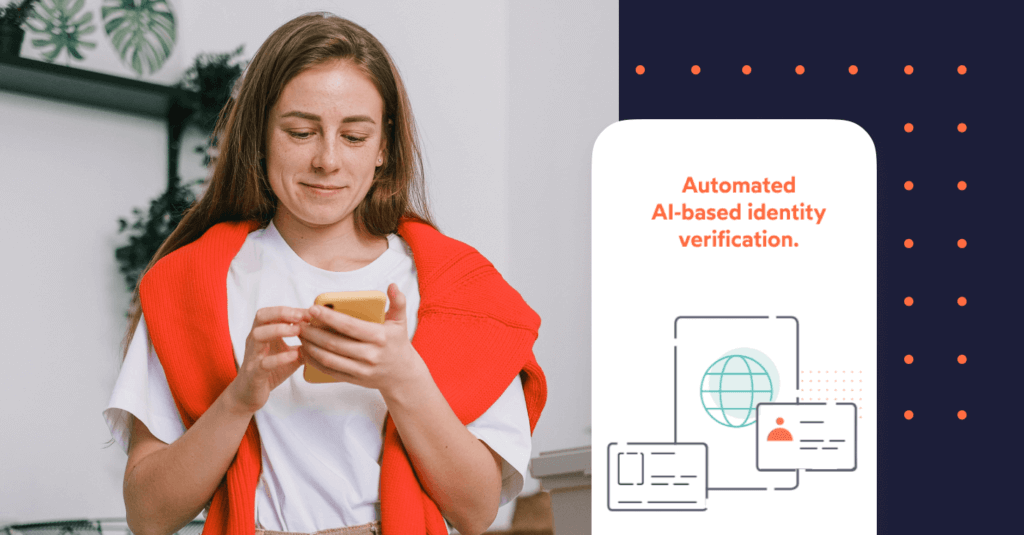

Artificial intelligence is the technical simulation of human intelligence by computers. AI is trained on data sets to think and draw conclusions like a human (this process is referred to as machine learning). This has obvious applications in automated identity verification processes.

AI offers improved accuracy and speed of customer onboarding. It can be used in many areas, including automated identity verification, biometric matching, and ongoing transaction monitoring. Although AML regulations tend not to specify particular technologies, many regulators have confirmed the acceptability of AI and machine learning techniques.

eKYC solutions allow fintechs to mitigate regulatory risks at almost any stage of their growth plans. Done right, automated ID verification offers faster and more accurate customer onboarding, which can improve the overall customer experience and conversion.

Challenges of AI technology.

As with any major change, or adoption of new technology, there are challenges and difficulties associated with AI and machine learning. Staying aware of these is key to minimizing the impact. Working with a reliable technology provider will also help.

The first set of challenges relates to the use of AI techniques and machine learning algorithms in general.

Poor data quality or preparation

AI and machine learning algorithms rely on data for training. They need access to historical or training data in sufficient quantity and of sufficient quality, and their results must be checked. This is more of a concern when a new system or algorithm is first introduced, since data is limited. Over time and with increased use, the situation improves as more data becomes available.

Avoiding AI bias

Training needs to be carefully managed and checked to avoid AI bias, which could lead to poor results. AI bias occurs when a model is trained in a way that reflects human biases, whether these come from human trainers or from poor data. Facial recognition as part of KYC is one area that is particularly susceptible to this.

Such biases are often not intentional, and can be hard to identify. Methods to avoid bias include preparing truly representative data sets, considering outputs rationally, and reviewing AI results against real data.
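One way to review AI results against real data, as suggested above, is to compare error rates across groups in a labelled evaluation set. The following is a minimal sketch only: the function name, the group labels, and the sample records are hypothetical, and it assumes every record is a comparison already known to be a genuine match.

```python
from collections import defaultdict


def false_non_match_rate_by_group(records):
    """Compute the false non-match rate per group.

    Each record is a (group, predicted_match) tuple for a comparison that is
    known to be a genuine match, so every predicted_match of False is an error.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, predicted_match in records:
        totals[group] += 1
        if not predicted_match:
            misses[group] += 1
    return {group: misses[group] / totals[group] for group in totals}


# Hypothetical evaluation results; group names are placeholders.
records = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]

rates = false_non_match_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.2f}")
```

A large gap between groups would be a signal to revisit the training data, although a real evaluation would need far more samples than this illustration uses.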

Human involvement is still needed

AI can only do so much. Human involvement is needed in both the training phase and in real-time usage. During training, human input guides machine learning in identifying failures such as non-matches or fraud cases. These could be real failures or false positives, and algorithms need to learn from this. Also, remember that KYC and AML constantly evolve to meet new challenges and fraud methods. This means that ongoing updates and training for machine learning algorithms are common.

AI is largely automated when implemented, so human oversight is still needed.

To stay safe and compliant with regulations, adopters of AI need to recognize when it does not work. In identity verification, this means cases where the AI cannot be sure of an identity. Such cases must be flagged for human review.

With IDnow’s AutoIdent, cases can be sent for video-based manual review, which is completed in real time with minimal disruption to the user. Read more here: Consumer choice for Identity Verification
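The general rule of flagging uncertain cases for human review can be sketched as a simple confidence threshold. This is an illustration only and does not describe how AutoIdent or any specific product works. It assumes the verification model returns a score between 0 and 1, and `VerificationResult`, `route_case`, and the threshold value are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold for illustration; a real value would be tuned
# against validation data and the applicable regulatory requirements.
APPROVE_THRESHOLD = 0.90


@dataclass
class VerificationResult:
    case_id: str
    match_score: float  # assumed model confidence in the range [0, 1]


def route_case(result: VerificationResult) -> str:
    """Return 'approve' for confident matches, otherwise 'manual_review'."""
    if not 0.0 <= result.match_score <= 1.0:
        raise ValueError("match_score must be between 0 and 1")
    if result.match_score >= APPROVE_THRESHOLD:
        return "approve"
    # The model is not sure of the identity, so a human reviewer decides.
    return "manual_review"


print(route_case(VerificationResult("case-001", 0.97)))
print(route_case(VerificationResult("case-002", 0.62)))
```

Nothing is rejected automatically in this sketch: any case the model is not confident about goes to a person, which mirrors the oversight requirement described above.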

Managing additional risks introduced by AI and machine learning.

Any new method or process introduces risks, which is undoubtedly the case with AI and machine learning in financial services. These could be in any area, and the unknown element is part of the challenge.

The UK’s Financial Conduct Authority (FCA) carried out a study of these risks in 2019, involving close to 300 financial institutions. The study revealed several risk areas, but it also highlighted that organizations were well aware of how to address them.

Rather than introducing entirely new risks, the FCA felt that machine learning reinforced existing risks, which could be managed through appropriate staff training and data validation frameworks.

The risk areas identified included:

- Insufficient training of staff to use systems.

- Risks introduced by the complexity of an AI system and challenges in validating and governing such systems.

- Issues with data quality leading to inaccurate results.

To find out more about the regulatory challenges facing UK fintechs, check out our Fintech Spotlight Interview with David Gyori, CEO of Banking Reports.

Challenges of integrating AI into fintech and KYC solutions.

Integrating AI into the onboarding process is not necessarily straightforward. There are further challenges that are specific to KYC, verification, and onboarding that adopters of AI should be aware of.

Managing customer expectations and experience

People are used to human interaction. The switch to automation needs to be managed against different experiences and expectations in different markets. Customer frustration is more likely in other AI applications, such as chatbots, but fintechs should still be aware of this possibility with AML and KYC.

Integrating KYC into the onboarding process

AI and machine learning algorithms may be invisible to the end user, but their effects certainly are not. Those effects are felt in steps such as detecting security features in identity documents and performing live biometric facial comparisons.

Done right, this should be seamless to the end customer. Errors or problems could lead to a lack of confidence in security. Failures or slow processes as part of onboarding could lead to customers abandoning the sign-up process. These situations are clearly not good for brand, reputation, or customer conversion rates. Any problems here could worsen as AI becomes more widespread and customer expectations increase.

Staying up to date with regulations

This is a major consideration for RegTech companies. KYC and AML regulations are well defined by FATF and national regulators. However, these regulations are largely technology-neutral. Fintech companies, just like banks, need to stay aware of this and be prepared to justify the technologies used.

More and more national regulators are permitting the use of AI techniques. Countries that allow full AI usage currently include the United Kingdom, France, Spain, Belgium, and Finland. Other European countries permit video-based KYC. Germany has long been one of the most regulated markets in the world, and has recently accepted an element of automation combined with a manual review.

The acceptance of AI is increasing and will eventually pave the way for easier expansion for fintechs. However, until it is fully accepted, fintechs will need to adapt methods and offerings for different markets.

How is AI used in identity verification?

AI has changed the identity verification and onboarding processes for financial institutions. Not long ago, these were entirely manual processes. Customers would visit a branch to verify physical identity documents. This was time-consuming, costly, and frustrating for customers.

Artificial intelligence in identity verification can be used in several ways:

- Checking identity document authenticity. AI can automatically detect and verify security features contained in identity documents.

- Live video biometrics. Identities can be checked by comparing biometrics from live photos or videos with the photographs in identity documents. With training data and human oversight, machine learning algorithms can be trained (and continually improved) to detect matches.

- Transaction monitoring. KYC and AML are not just one-off checks that need to be carried out when onboarding a customer. Ongoing monitoring of customers and transactions is required. AI can assist here as well.
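To make the ongoing monitoring idea concrete, the sketch below flags a transaction whose amount is far from a customer's past amounts. It is a simple statistical rule (a z-score check) standing in for a trained model, not a machine learning system, and the function name, threshold, and sample amounts are all hypothetical.

```python
from statistics import mean, stdev


def is_unusual(history, amount, z_threshold=3.0):
    """Flag an amount that lies far from the customer's past amounts."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts are identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


# Hypothetical past transaction amounts for one customer.
history = [20.0, 25.0, 22.0, 19.0, 24.0]

print(is_unusual(history, 23.0))   # close to the usual range
print(is_unusual(history, 500.0))  # far outside the usual range
```

A production system would consider many more signals than the amount alone, and flagged transactions would still go to a human for review.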

The benefits of using AI for identity verification.

Fast results.

Automated processes are much faster. AI-based verification can check documents and identities within minutes. Manual intervention can be built into the process and handled quickly.

Lower cost.

Manual KYC verification can be costly – in both time and money. Delays and backlogs can lose customers.

Improved accuracy.

Automated processing limits the possibility of human error or oversight. As AI is trained on datasets, accuracy improves as more data is consumed.

Meets regulatory requirements.

Staying compliant is vital in the heavily regulated financial services sector. AI is permitted by many regulators, and acceptance is increasing. Non-compliance can be very expensive. Check out our ‘2022: The year for fraud, fines, and fintechs’ blog for more information.

Allows for true ongoing monitoring.

AI can monitor activity and transactions continuously and in real time. Achieving this manually would require a vast effort.

Improved customer experience.

Customers want to know their interactions are secure, while also having a fast and friction-free experience.

Increased conversion rates.

Customer conversion rates are critical in online financial services. They improve with faster onboarding and verification, as customers are less likely to abandon the process.

Allows for global expansion.

Having an automated solution that meets appropriate regulatory requirements makes it simpler to expand services into other countries. More and more countries are accepting automated AI solutions as part of KYC.

Regulatory challenges in the fintech industry.

By Francisco Martins

Senior Identity Consultant, Financial Sector UK/I at IDnow